
Should Facebook Ads Traffic be Sent to a 3rd Party Site?

Last year, we started promoting articles and reviews of our product written on other bloggers’ websites.

Then an idea came to mind: what if we replicated the same article on our own site and drove traffic from Facebook Ads to it?

This way, we’d not only get to toot our own horn but also be able to retarget all of these people!

Whenever your product gets featured in the media or by an influential person, there is an opportunity to get the word out and establish your brand as an authority.

We believe that these organic mentions (social proof) are one of the best forms of testimonials for any business.

That’s why promoting these articles is part of our growth strategy.

However, it is difficult to know who has read them.

When you promote these third-party articles, you cannot track the people who click through to the article unless you use a tool like Snip.ly.

This makes it impossible for us to retarget these readers and encourage them to sign up for free trials. Yes, that means we’re missing a big opportunity!

So we thought of replicating the article on our site. This would allow us to promote a 3rd party mention of our product, yet track and retarget these people.

To see if there is a difference between the two approaches, we decided to conduct this experiment with the following hypothesis:

Hypothesis: There is no difference in results (free trials and subscriptions) between driving traffic to the original feature article and to a replica on our own site.

Testing Facebook Ads Traffic

We designed an experiment to find out if this was indeed a good idea:

- Using Instapage, we replicated the original article, which ranks Agorapulse highly.

- We created 2 Facebook advertising campaigns – 1 for the original article and 1 for the replica.

- We retargeted people who visited our website in the last 30 days.

There are 4 things we want to highlight in our Facebook ad campaign setup:

- The targeting

- The ad

- The original article and replica

- Other settings

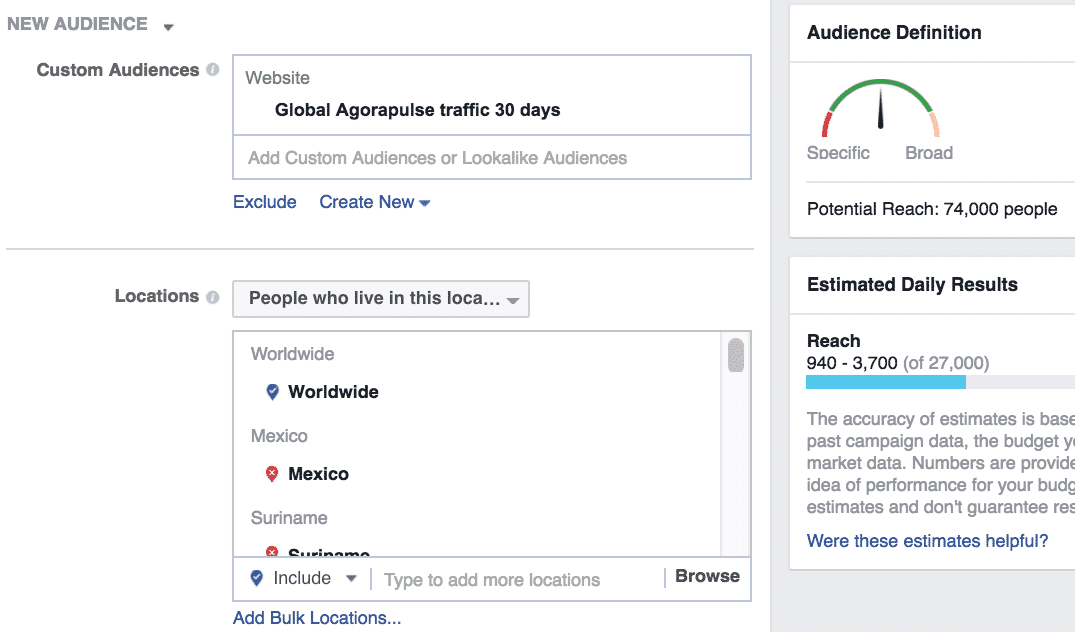

1. Targeting

For this experiment, we wanted to reach people who already know about Agorapulse. So we retargeted people who visited our website within the last 30 days.

We also targeted people worldwide but excluded Spanish-, Portuguese-, and French-speaking countries, since people there may find an English-language post less relevant.

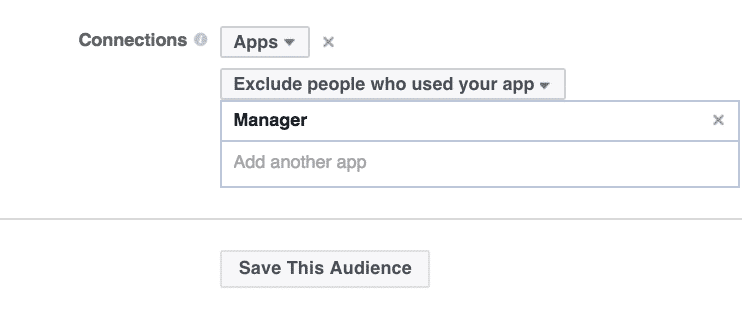

We also excluded users of the “Manager” app, which includes all free trial users and paying subscribers.

This kept existing users from contaminating the results, since we only wanted to track new free trials and subscribers generated by the ad.

2. Ad

The ad is simple. Since we were split testing two different articles, we used the same ad in both Facebook Ad campaigns.

3. The original article and replica

For the first campaign, we sent users to the original article.

Then we replicated the article and created a second campaign for it.

Both articles have identical content except for 3 things:

- The original article has social sharing buttons (including the social proof of 4.4K shares).

- The original article includes readers’ comments at the end of the article (there were 66 comments in total).

- The replica credits the original source of the article.

4. Other settings

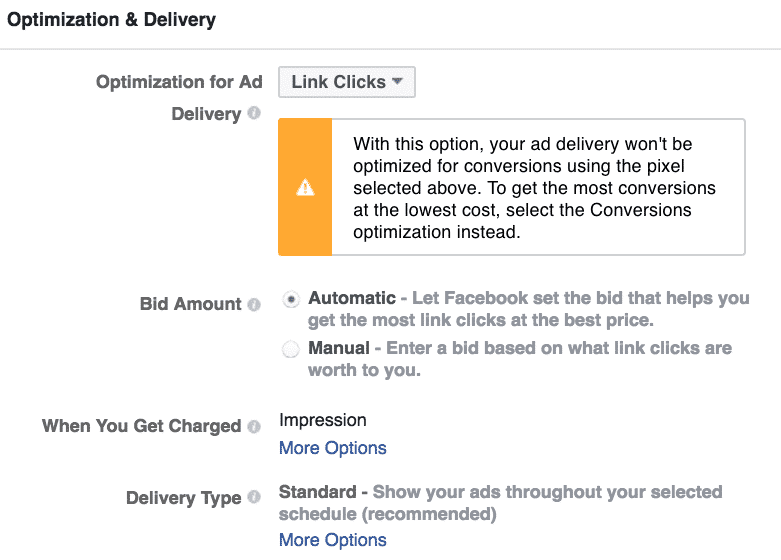

One unusual choice in this experiment was to optimize for link clicks instead of free trials.

Facebook recommends reaching a minimum of 50 conversions a week before using the conversion objective.

Since we didn’t meet that threshold, we decided to optimize for link clicks instead.

Apart from that, we used automatic bidding and spent $15 a day on each campaign.

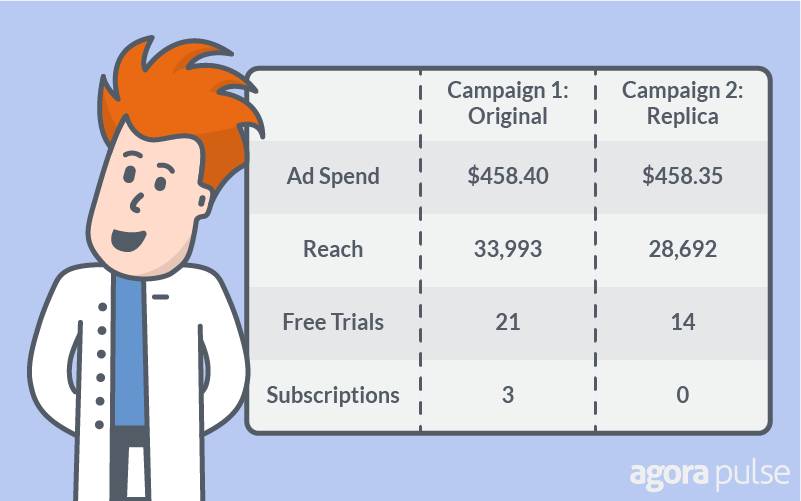

What the Data Says about Facebook Ads Traffic

The original article generated 50% more free trials than the replica.

Not only did the original article generate more free trials, but it was also the only campaign to bring in paying subscribers: 3 of them, each on a $99/month plan.

This means that the Facebook Ad campaign will break even in 1.5 months and make profits thereafter. Sweet!

Is the Data Accurate?

If we ran this experiment or a similar experiment again, would we see the same results?

To find out, we have to see if the results are statistically significant.

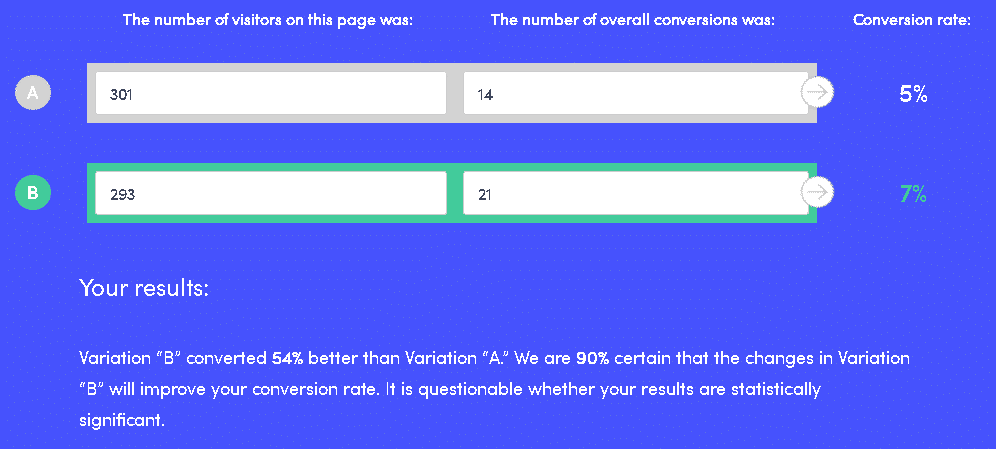

So we entered the number of visits to each page and the number of free trials into an A/B split-testing tool (A represents the replica page, B represents the original article).

As you can see, the original article converted 54% better than the replica.

However, the tool reported only 90% confidence, which falls short of the 95% threshold commonly used to call a result statistically significant.

In other words, there is roughly a 1 in 10 chance of seeing a gap this large even if both pages actually converted equally well, so a rerun might not produce a similar result.
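If you’d like to reproduce a check like this without an online calculator, here’s a minimal Python sketch of a pooled two-proportion z-test, one standard method behind A/B significance calculators. We don’t know exactly which formula our tool used (some report one-sided confidence), so its numbers may differ slightly. The function names are our own, and the visit and trial counts at the bottom are hypothetical placeholders, not our actual data.

```python
from math import erf, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Pooled two-proportion z-test. Returns (z, two_sided_p_value)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis of "no difference"
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Nobody converted, or everybody did: both rates are identical
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Standard normal CDF via erf: Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))
    phi = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return z, 2 * (1 - phi)


def relative_lift(conv_a, n_a, conv_b, n_b):
    """How much better B converts than A, as a fraction (0.54 means 54%)."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    return (rate_b - rate_a) / rate_a


# Hypothetical placeholder counts (A = replica, B = original article)
visits_a, trials_a = 1000, 20
visits_b, trials_b = 1000, 31
z, p = two_proportion_z_test(trials_a, visits_a, trials_b, visits_b)
print(f"lift = {relative_lift(trials_a, visits_a, trials_b, visits_b):.0%}")
print(f"z = {z:.2f}, two-sided p = {p:.3f}, confidence = {1 - p:.0%}")
```

With borderline results like ours, collecting more visits per page is the usual way to get a clearer answer.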

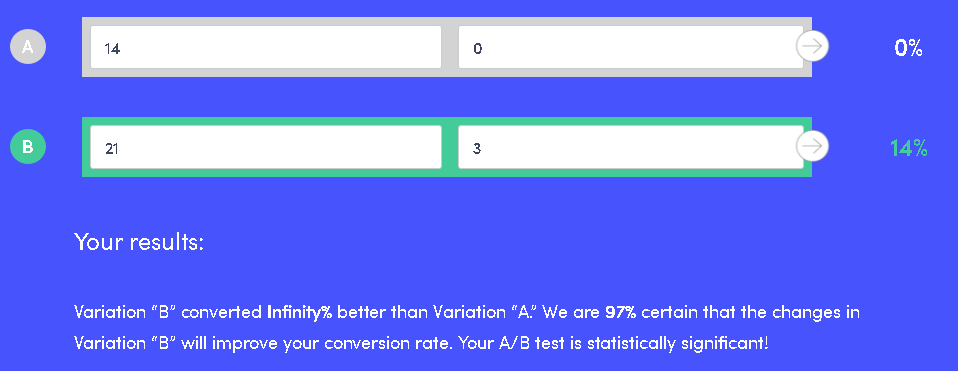

What about the conversion of free trials into subscribers? Would we likely see the same results again if we re-ran the experiment?

We entered the number of free trials and subscriptions for each campaign (again, A represents the replica page and B represents the original article), and here’s what we saw:

The results are positive!

The tool reported 97% confidence, which means a difference this large would be unlikely (roughly a 3% chance) if both pages actually turned free trials into subscribers at the same rate.
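One caveat: with only 3 subscribers on one side and none on the other, calculators built on the normal approximation can be unreliable. A common alternative for counts this small is Fisher’s exact test. Here’s a minimal pure-Python sketch; the function name is our own, and the counts in the example are hypothetical placeholders, not our real numbers.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Sums the probabilities of every table with the same row and column
    totals that is no more likely than the observed one.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    total = comb(row1 + row2, col1)

    def table_prob(x):
        # Hypergeometric probability that the top-left cell equals x
        return comb(row1, x) * comb(row2, col1 - x) / total

    observed = table_prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    return sum(
        table_prob(x)
        for x in range(lo, hi + 1)
        if table_prob(x) <= observed * (1 + 1e-9)  # tolerance for float noise
    )


# Hypothetical placeholder counts, NOT our real numbers.
# Rows: original article, replica. Columns: subscribed, did not subscribe.
p = fisher_exact_two_sided(3, 17, 0, 13)
print(f"two-sided p = {p:.3f}")
```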

Other Insights

Truth is, we thought the replicated article would outperform the original.

It has no menu, no sidebars, and nothing which distracts the reader.

So these results were surprising to us!

But here’s what the scientists had to say:

#1. You lose credibility when you’re tooting your own horn.

In the replicated article, we credited iag.Me, the original publisher.

However, doing so probably signals to readers that they’re about to read something potentially biased.

Why else would we be promoting it?

So, compared with the original article, we may have lost many visitors before they finished reading the post.

This would explain why fewer people took up free trials – because they did not even get to the point in the article where our product was mentioned.

#2. The social proof on iag.Me may have boosted conversions.

Earlier, I mentioned that one of the key differences between the original and replicated articles was the presence of social proof.

In the original article, you can see that it has been shared 4.4K times on social media!

Social proof is a powerful force that can influence one’s choice.

That’s why so many new blogs promote their articles as hard as they can to look credible.

Compare a 3rd-party article shared over 4.4K times with a replicated article with no social proof: which is more believable?

#3. The results may change…

Although we launched this experiment with the aim of tracking visitors and retargeting them in the future, we have yet to do so.

Once we do, the results may not be the same.

We may yet see much cheaper downstream free trials and subscriptions from the people who visited the replica.

All we need to do is to present them with the deal and encourage them to try our product out.

Further Facebook Ads Testing

The results from this experiment were encouraging, so we duplicated the campaign with the original article and launched it as a fresh campaign.

We spent an additional $3,862.62 and generated 163 free trials at $23.70 each (well within our target) and turned 11 of those free trials into paid subscriptions!

Those subscriptions were worth an average of $119 a month, so the campaign would break even in about 3 months and turn a profit after that.
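The arithmetic behind these figures is easy to sanity-check. Here’s a minimal Python sketch (the helper names are our own) that reproduces the $23.70 cost per trial and the roughly 3-month break-even from the numbers above. It assumes no churn and counts only ad spend as a cost, which is a simplification.

```python
def cost_per_trial(ad_spend, free_trials):
    """Ad spend divided by the number of free trials it produced."""
    return ad_spend / free_trials


def months_to_break_even(ad_spend, subscribers, avg_monthly_price):
    """Months of subscription revenue needed to cover the ad spend.

    Simplification: assumes every subscriber keeps paying the average
    price each month (no churn) and ignores costs other than ad spend.
    """
    monthly_revenue = subscribers * avg_monthly_price
    return ad_spend / monthly_revenue


# Figures from the follow-up campaign described above
spend, trials, subs, price = 3862.62, 163, 11, 119
print(f"cost per trial: ${cost_per_trial(spend, trials):.2f}")  # $23.70
print(f"break-even: {months_to_break_even(spend, subs, price):.2f} months")  # 2.95
```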

To say the least, we are happy with how the campaign is going!

What do you think about this experiment? Is there something you would have done differently?