
Are Facebook Video Ads Better?

It was announced that Facebook plans to invest over one billion dollars in its video platform over the next year!

This investment, along with recent product releases such as the ‘Watch’ service, lends further credibility to advertisers’ claims that Facebook video ads cost less, reach more people, and generate a better ROI than other ad formats.

In AdEspresso’s Facebook group, we kept seeing posts like the one below.

So the Social Media Lab decided to test Facebook video ads against photo link ads.

Here’s what we did:

- We used this blog post as the destination of our experiment.

- We created an audience of people who visited our English website in the last 30 days, excluding our trial and paying users.

- We created 2 nearly identical campaigns – one using a video and another using a photo.

In the rest of this post, we’ll share the experiment setup, the ads we ran, the results, and our key takeaways.

Testing Facebook Video Ads

In this experiment, we wanted to be very clear about which metrics we would use to judge the test. So we chose the three metrics that matter most in our campaign and measured each one:

- Number of Paying Users

- Number of Free Trials

- Number of Leads

With these goals in mind, this is our hypothesis:

Facebook Video Ads will not get better results than Image Link Ads.

Our reasoning: we continue to see positive results from normal image link ads, and while video engagement is on the rise, we doubt that video views will turn into meaningful conversions.

How We Set Up The Experiment on Facebook Video Ads

We used AdEspresso to create 2 campaigns: one with a promotional video, and the other with a photo.

Both campaigns linked to the same blog post mentioned above.

— How We Named The Ads:

- Photo link ad: SMLabs – Test 1 – Link ad – Desktop newsfeed – Website conversions

- Video link ad: SMLabs – Test 1 – Video ad – Desktop newsfeed – Website conversions

— Ad Set Settings:

Next, we created 1 ad set in each campaign. In each ad set, the settings were nearly identical:

- Targeting: English website visitors in the last 30 days, excluding trial and paying users

- Budget: $20/day

- Conversion window: 28-day click

- Placement: Desktop newsfeed

Targeting:

To make this test as effective as possible, website visitors should really only see 1 of the 2 ads.

In other words, the person who viewed the photo ad should not be able to view the video ad and vice versa.

Otherwise, the results may be skewed, as there would be some overlap in the audiences.

However, unless you have a HUGE budget to test with, and Facebook’s help, it is very difficult to prevent this from happening.

So, we took the next best option available to us and excluded those who viewed the video for at least 3 seconds from the photo ad set.

By doing this, people who viewed the video for at least 3 seconds would not see the photo ad, unless, of course, the photo ad was delivered to them before they viewed our video ad.
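To make the limits of this one-way exclusion concrete, here is a minimal sketch in Python. It is a simplified model, not Facebook's delivery system: the event list, the user names, and the `users_exposed_to_both` helper are all hypothetical. Only the 3-second threshold comes from our setup.

```python
VIDEO_VIEW_THRESHOLD_SECONDS = 3  # the exclusion rule used in the photo ad set


def users_exposed_to_both(events):
    """Return users who ended up seeing both ads.

    events: chronological list of (user, ad, seconds_watched) tuples,
    where ad is "video" or "photo". Hypothetical data model.
    """
    excluded_from_photo = set()
    saw_photo = set()
    saw_video = set()
    for user, ad, seconds in events:
        if ad == "video":
            saw_video.add(user)
            # Only views of 3+ seconds add the user to the exclusion audience.
            if seconds >= VIDEO_VIEW_THRESHOLD_SECONDS:
                excluded_from_photo.add(user)
        elif ad == "photo":
            if user in excluded_from_photo:
                continue  # exclusion blocks delivery of the photo ad
            saw_photo.add(user)
    return saw_photo & saw_video


# Hypothetical users, for illustration only.
events = [
    ("ana", "video", 5), ("ana", "photo", 0),  # excluded before the photo ad
    ("ben", "photo", 0), ("ben", "video", 5),  # photo ad arrived first
    ("cat", "video", 1), ("cat", "photo", 0),  # watched under 3 seconds
]
print(sorted(users_exposed_to_both(events)))  # ['ben', 'cat']
```

In this toy model, overlap still happens in two cases: the photo ad is delivered first, or the video is watched for less than 3 seconds.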

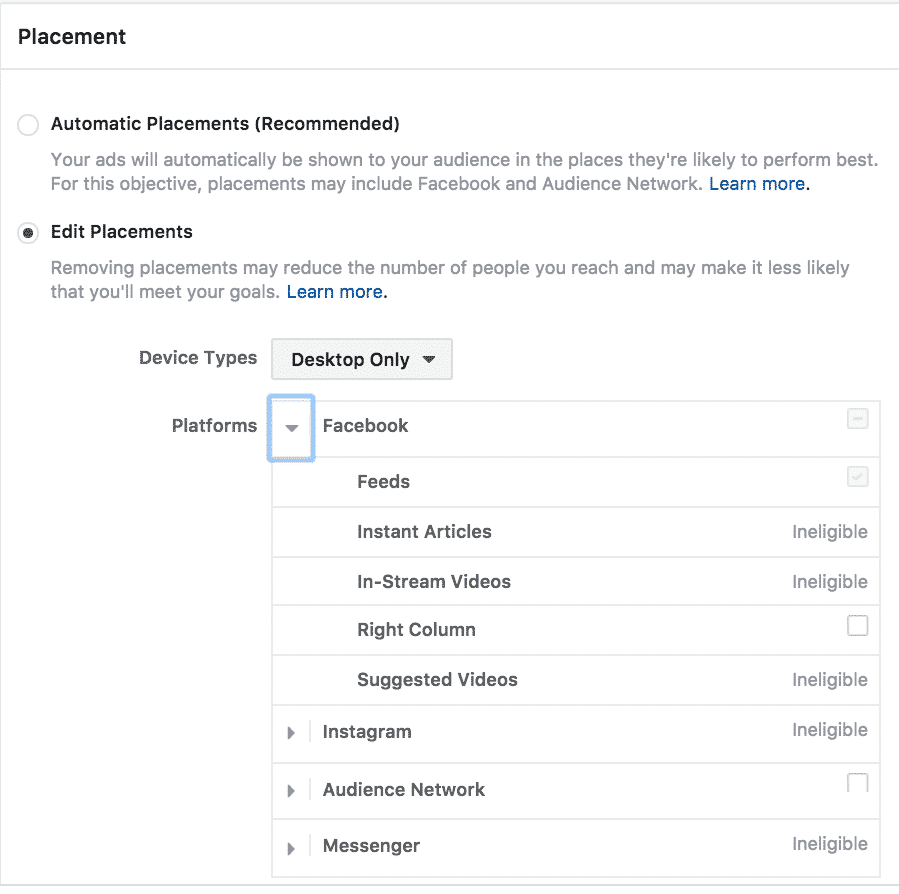

Placement:

As you can see above, we chose to run our ads only on the desktop newsfeed.

This is because visitors cannot sign up for a free trial on mobile devices. So if we ran ads on mobile placements, visitors interested in a free trial would have to visit our website on their desktops and sign up.

The process of going from mobile to desktop makes it difficult for us to track conversions.

Ad Creative & Copy:

To get statistically significant split test results, we tested only a couple of variations and kept the ad copy and all other ad elements the same for both.

Here’s what the video link ad looks like:

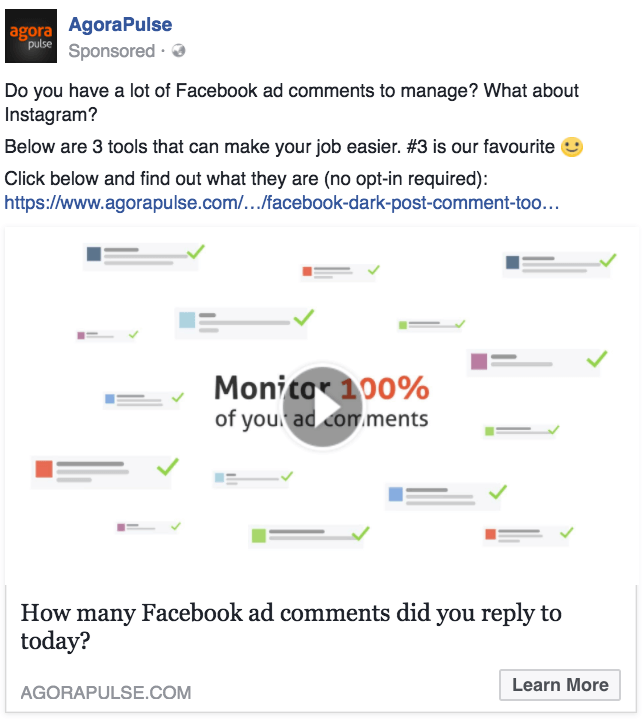

Here’s an example of the photo link ad:

Here’s what the blog post looks like:

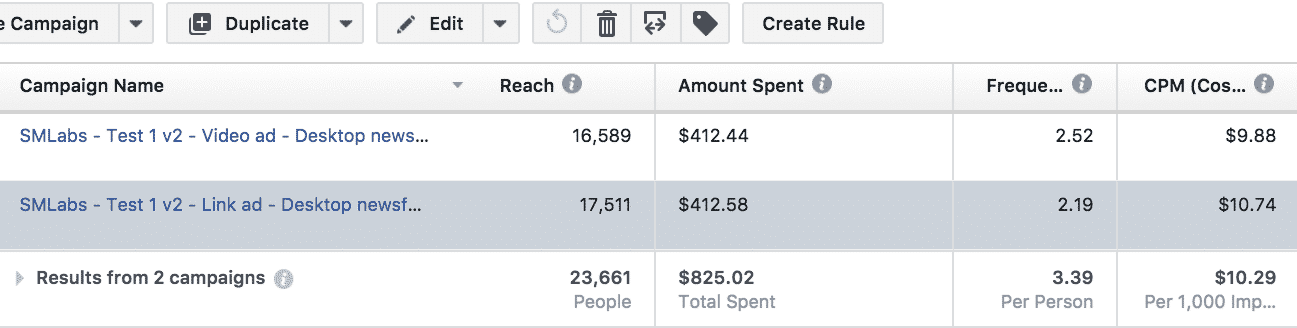

Facebook Video Ad Test Results

The following results are based on a 28-day click and 1-day view attribution window, as it is usually best to base your results on 28-day click attribution.

In total, the two campaigns picked up 31 new leads and 40 free trials.

The Facebook video ad brought in 26 free trials, while the photo ad only brought in 14.

The video ad was also the only ad that led to a paid subscription, worth $199/month.

From the results, it seems like Facebook video ads are working for us!

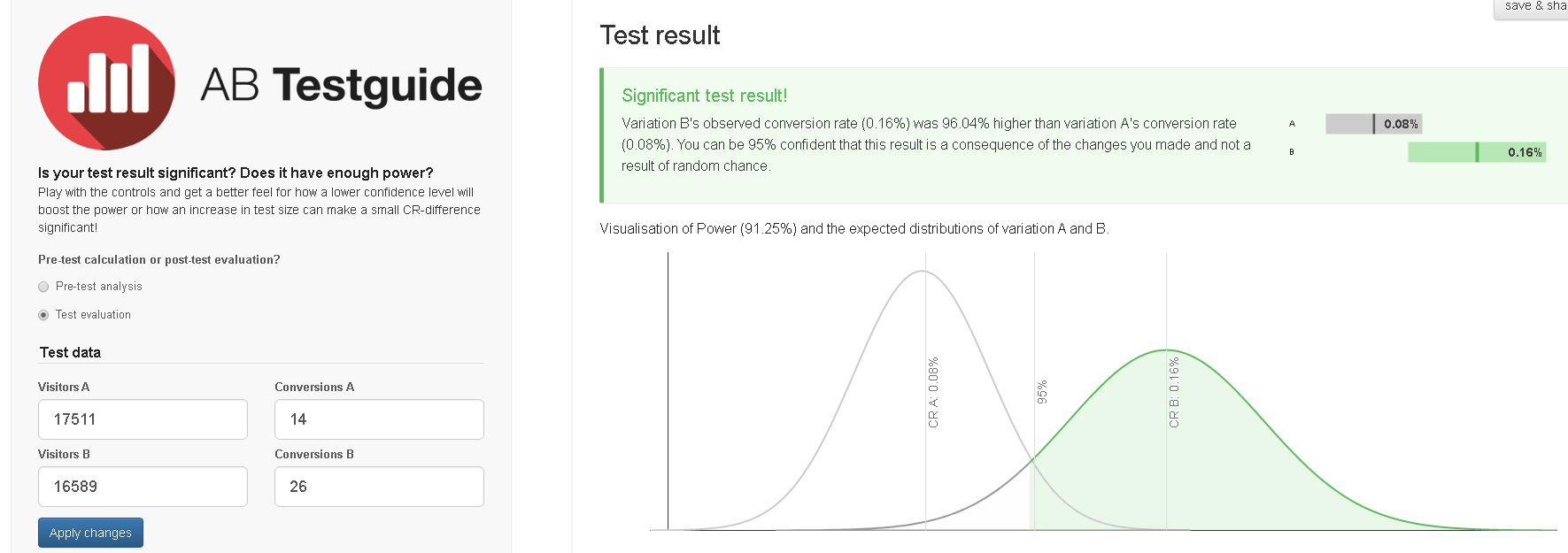

To validate our conclusion that the video ad outperformed the photo ad, we ran the results through a statistical significance test.

While 1 signup versus 0 signups is a big deal to us, the difference fails the statistical test because the numbers are so low.
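A rough sketch of why a single paid signup cannot pass a significance test is Fisher's exact test, written here in plain Python. Using free trials as the denominator (1 paid out of 26 video trials versus 0 paid out of 14 photo trials) is an assumption made for illustration. It is not necessarily the calculation behind the screenshot above, and `fisher_exact_two_sided` is a hypothetical helper name.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2 = a + b, c + d
    col1 = a + c
    denom = comb(row1 + row2, col1)

    def prob(x: int) -> float:
        # Hypergeometric probability of x "successes" in row 1, margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    observed = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Add up every possible table that is no more likely than the observed one.
    total = sum(
        p for p in (prob(x) for x in range(lo, hi + 1))
        if p <= observed * (1 + 1e-9)
    )
    return min(1.0, total)


# Video: 1 paid, 25 trial-only. Photo: 0 paid, 14 trial-only.
p_value = fisher_exact_two_sided(1, 25, 0, 14)
print(f"p = {p_value:.3f}")  # p = 1.000
```

Under these assumptions the p-value is about 1.0, so one paid signup on its own gives no statistical evidence either way.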

But when we compare reach to the number of free trials, the difference is quite significant, as shown below.

These are great results that help us conclude that Facebook video ads outperformed photo ads.

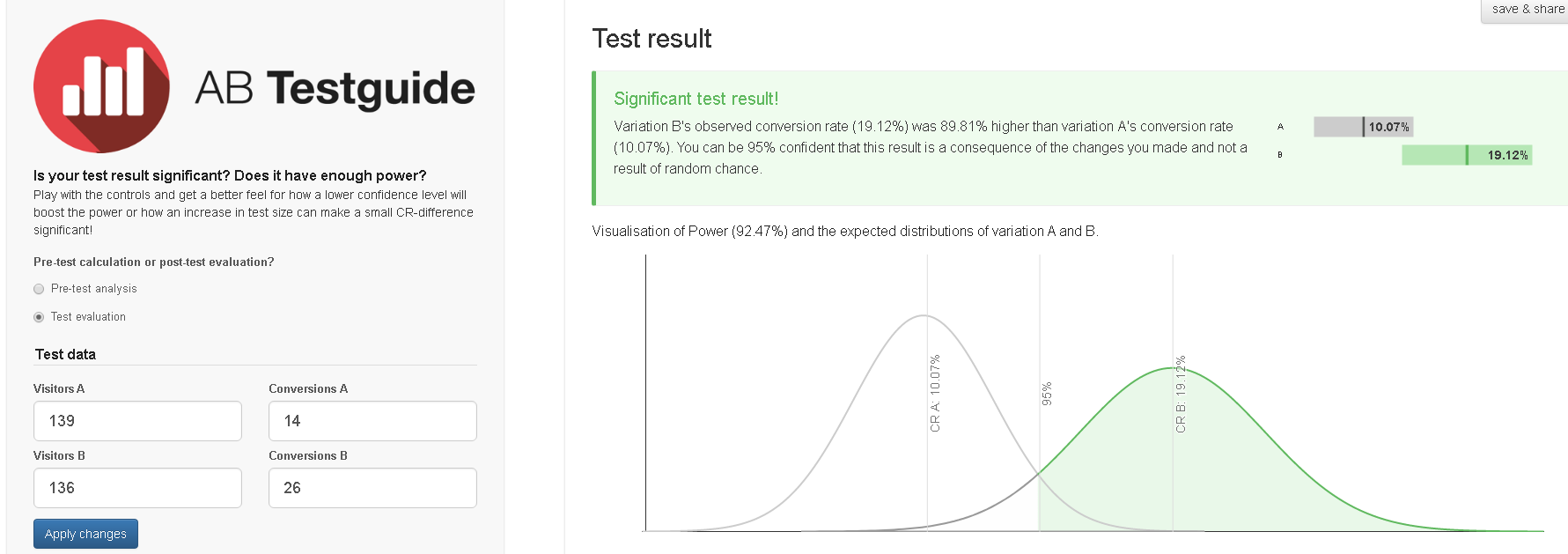

But, just to make sure we’ve poked every hole in this that we can, let’s also analyze the number of link clicks versus free trials.

Again, the test results indicate that Facebook video ads outperformed ads using photos.
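If you want to run this kind of check on your own campaigns, one common option is a two-proportion z-test, sketched below in Python. This is an assumption about method, since the exact formula behind our screenshots is not spelled out here. The function name is hypothetical, and the reach figures in the usage lines are placeholders, not the real reach from this experiment. Only the 26 and 14 free trials come from our results.

```python
from math import erfc, sqrt


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for conversion rates conv_a/n_a vs conv_b/n_b."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both ads convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_a - rate_b) / std_err
    p_value = erfc(abs(z) / sqrt(2))  # two-sided tail of the normal distribution
    return z, p_value


# PLACEHOLDER reach values: replace with the reach (or link clicks) of each ad.
video_reach = 10_000
photo_reach = 10_000
z, p = two_proportion_z_test(26, video_reach, 14, photo_reach)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Swap link clicks in for reach to repeat the comparison at the click level. A p-value below 0.05 is the usual threshold for calling a difference significant.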

We have confidence moving forward that video ads will result in more free trials and signups.

Lean Towards Facebook Video Ads

From the experiment, we were able to determine that the ad format, video vs. photo, doesn’t really affect the ad performance as much as we anticipated.

But, we’re really glad we ran with it.

The data we collected showed us that Facebook video ads may convert better for us, and we’d recommend you try them as well.