In this experiment, we’ll share the data behind how we got over 50% more clicks on our Facebook Ads!

Why Test Facebook Ads Comments?

I’ve worked with numerous eCommerce companies and one of the problems we constantly faced was dealing with the barrage of comments people were making on our ads.

Prospects’ questions, happy and unhappy customers, spam, and trolls were all over the place.

On an average day, you could receive a few hundred comments. Managing these comments alone required 2 full-time staff members!

It made me wonder if we should even bother managing these comments at all.

Do these comments really matter? Or are we just overly concerned for nothing?

Hypothesis: Facebook Ads with comments will get more clicks.

After thinking further, we came up with a secondary hypothesis:

Secondary hypothesis: The *sentiment* of comments will affect the clickthroughs on your ad.

Our Plan of Attack: How we tested the impact of Facebook Ads Comments

This experiment is designed quite differently from previous experiments. Let’s take a look at our campaign structure first and I’ll explain why.

Campaign Structure

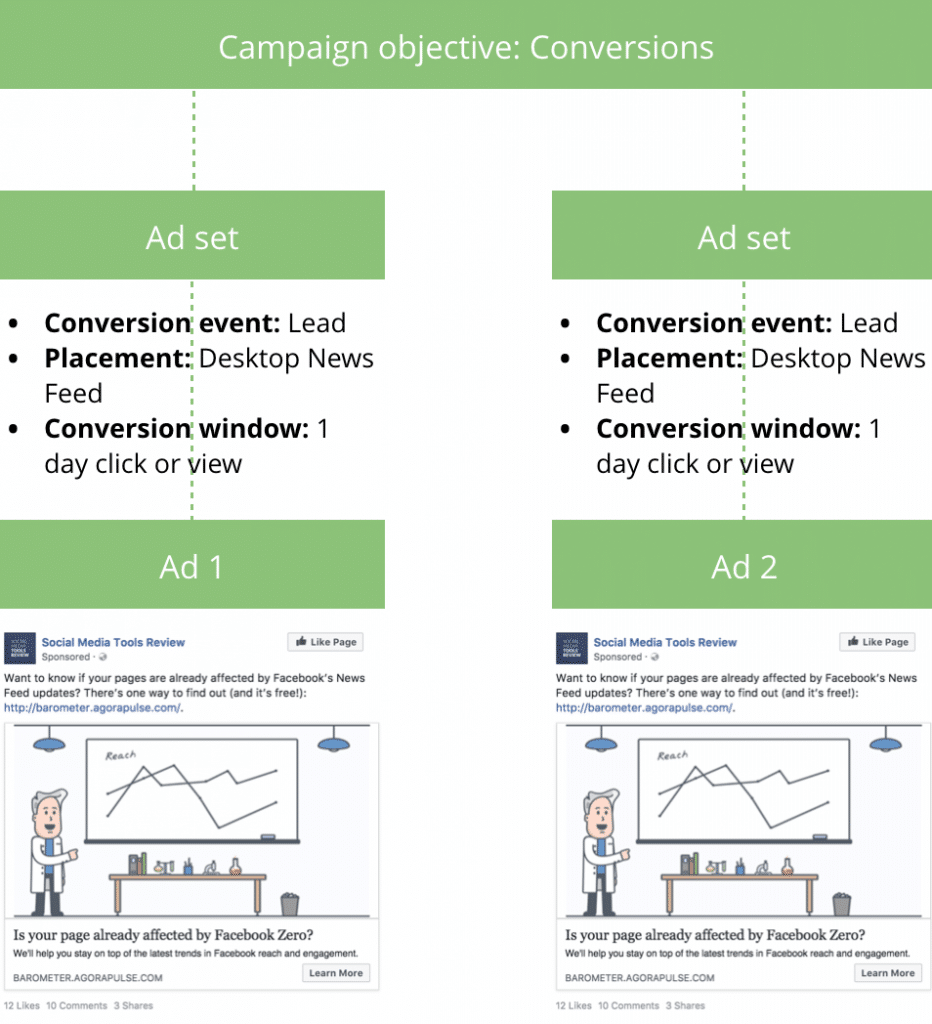

Here’s how we structured the campaign:

As you can see, we created one campaign with the conversion objective. The campaign contains 2 identical ad sets, each with an identical ad.

Experiment Flow

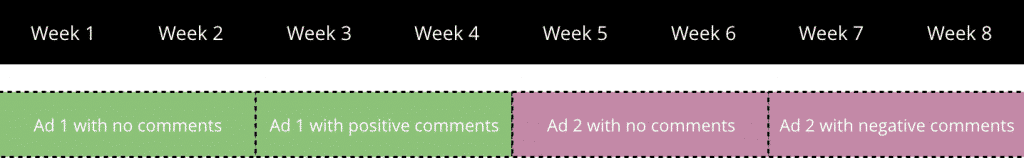

Not only did we want to compare between posts with and without comments (to test the first hypothesis), we also wanted to see if the sentiment of the comments mattered (to test the second hypothesis).

To do this, we made sure of a few things:

- We only targeted people who visited our website in the last 3 days. This is because we wanted to “reset” the audience after completing each stage of the experiment.

- We created 2 identical ads in separate ad sets. One would be used for positive comments while the other was reserved for negative comments.

- The first ad ran for 2 weeks without comments, then we added positive comments and ran it for another 2 weeks. After that, we paused the first ad and repeated the same process. The second ad ran for 2 weeks without comments, then we added negative comments and ran it for another 2 weeks.

This matters because the two ads must not run at the same time. If they did, the same people might see both ads, and the comments they saw on one could affect their decision to click on the other, skewing our results.
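To make the sequencing concrete, here is a minimal sketch of the four back-to-back stages described above. The start date is hypothetical (the article doesn't state one), and `build_schedule` is just an illustrative helper, not a Facebook Ads feature.

```python
from datetime import date, timedelta

STAGE_LENGTH = timedelta(weeks=2)

# Stage order taken from the description above: each ad runs first
# without comments, then with its assigned comment sentiment.
STAGES = [
    ("Ad 1", "no comments"),
    ("Ad 1", "positive comments"),
    ("Ad 2", "no comments"),
    ("Ad 2", "negative comments"),
]


def build_schedule(start: date):
    """Return (ad, condition, start, end) tuples; end dates are exclusive."""
    schedule = []
    stage_start = start
    for ad, condition in STAGES:
        stage_end = stage_start + STAGE_LENGTH
        schedule.append((ad, condition, stage_start, stage_end))
        stage_start = stage_end  # next stage begins only when this one ends
    return schedule


# Hypothetical start date, for illustration only.
schedule = build_schedule(date(2020, 1, 6))
for ad, condition, begins, ends in schedule:
    print(f"{ad} ({condition}): {begins} to {ends}")
```

Because every stage starts exactly when the previous one ends, no two stages overlap, and the whole run takes 8 weeks.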

Targeting and Budget

Besides targeting people who visited our website in the last 3 days, we also excluded people who converted on the ad and our existing customers.

To get a decent data set, we spent $20 per day on each ad.

The Ad

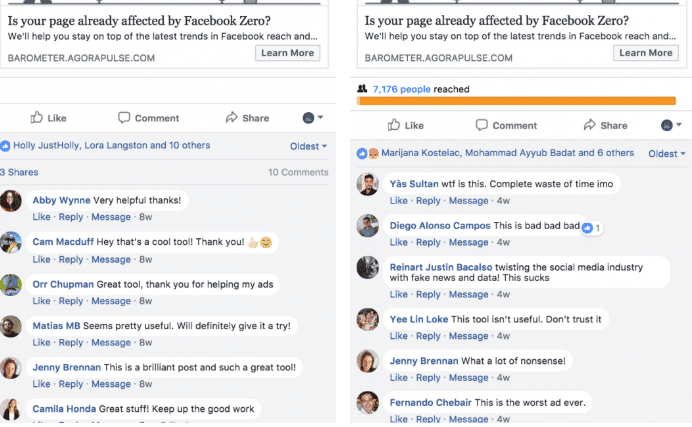

We centered the copy around our popular free tool, the Facebook Barometer, which measures the reach of your Facebook pages.

Test Results

To measure the success of this experiment, we used both CTR (all) and CTR (link).

In case you don’t know, CTR (all) measures the clickthrough rate of all kinds of clicks on your ad, such as clicks on page name, a link in the ad, comments, and more. You can find out everything about CTR (all) in Facebook’s help center.

We used CTR (all) because we wanted to measure not only people’s tendency to click on the ad, but also their tendency to pay attention to an ad in the News Feed.
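If you want to compute the two metrics yourself, here is a minimal sketch. The `AdStats` class and the numbers in it are hypothetical and only illustrate the arithmetic; they are not results from this experiment.

```python
from dataclasses import dataclass


@dataclass
class AdStats:
    impressions: int
    clicks_all: int   # every click on the ad: page name, link, comments, ...
    clicks_link: int  # clicks on the ad's link only


def ctr(clicks: int, impressions: int) -> float:
    """Clickthrough rate as a percentage of impressions."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return 100.0 * clicks / impressions


# Hypothetical numbers, for illustration only.
example = AdStats(impressions=10_000, clicks_all=250, clicks_link=120)
ctr_all = ctr(example.clicks_all, example.impressions)
ctr_link = ctr(example.clicks_link, example.impressions)
print(f"CTR (all): {ctr_all:.2f}%  CTR (link): {ctr_link:.2f}%")
```

Since link clicks are a subset of all clicks, CTR (link) can never be higher than CTR (all) for the same ad.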

Hypothesis 1: Facebook Ads with comments will get more clicks.

Did ads with comments encourage more clicks?

Yes and no.

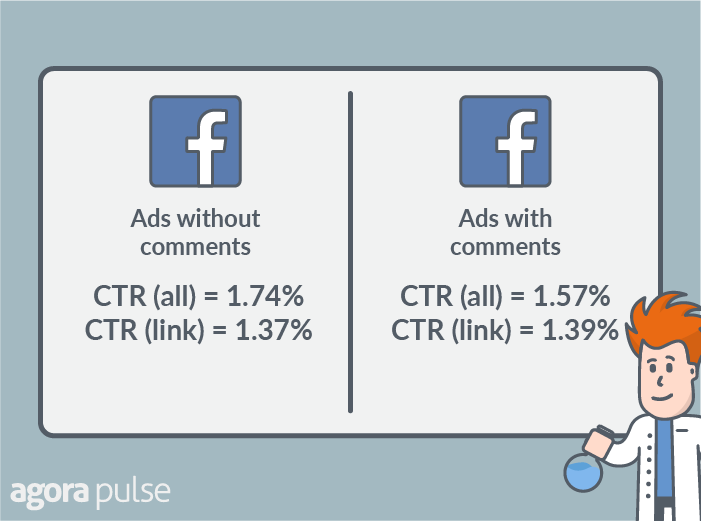

Ads without comments had an 11% higher CTR (all) than ads with comments, but both of them had a similar CTR (link).

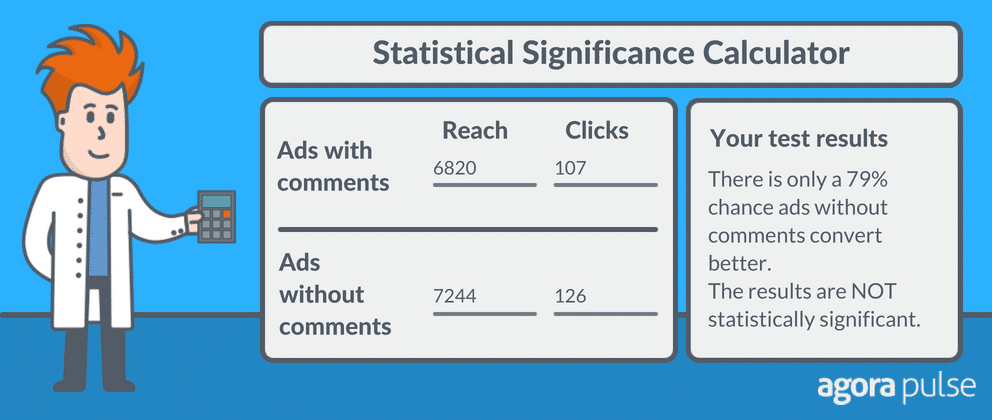

Was the difference in CTR (all) statistically significant? We went to our trusty statistical significance calculator to find out. (Neil Patel has a great calculator on his site if you need to A/B test.)

Unfortunately, the results did not pass the statistical significance test.
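For readers who prefer to check significance in code, here is a rough sketch using a two-proportion z-test. This is an assumption on our part: it is one common method for comparing two click rates, not necessarily what the calculator we used does internally. The click and impression counts are hypothetical, chosen only so that one ad has an 11% higher CTR.

```python
import math


def two_proportion_z_test(clicks_a: int, n_a: int, clicks_b: int, n_b: int):
    """Return (z, two_sided_p) for the difference between two click rates."""
    p_a = clicks_a / n_a
    p_b = clicks_b / n_b
    # Pooled rate under the null hypothesis that both ads share one true CTR.
    pooled = (clicks_a + clicks_b) / (n_a + n_b)
    if pooled in (0.0, 1.0):
        raise ValueError("need at least one click and one non-click overall")
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 111 vs 100 clicks on 4,000 impressions each.
z, p_value = two_proportion_z_test(111, 4000, 100, 4000)
verdict = "significant" if p_value < 0.05 else "not significant"
print(f"z = {z:.2f}, p = {p_value:.3f} ({verdict} at the 5% level)")
```

With counts this small, an 11% relative difference is well within what chance alone can produce, which is why a result like this fails the test.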

But all is not lost!! Read on to see how you could get over 50% more clicks on your ads!!

Hypothesis 2: The sentiment of comments will affect people’s tendency to click on your Facebook Ads.

Our ads got a fair share of both positive and negative comments.

Did this make a difference in terms of clickthroughs? Indeed.

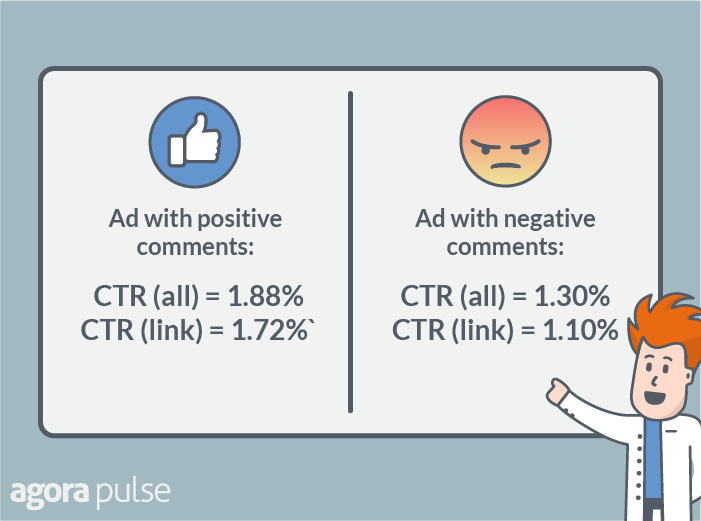

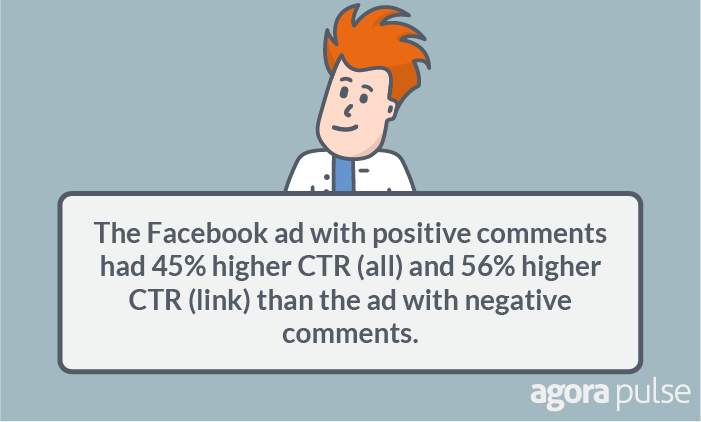

Ads with positive comments had a higher CTR than ads with negative comments, for both CTR (all) and CTR (link).

But do these differences pass the statistical significance test?

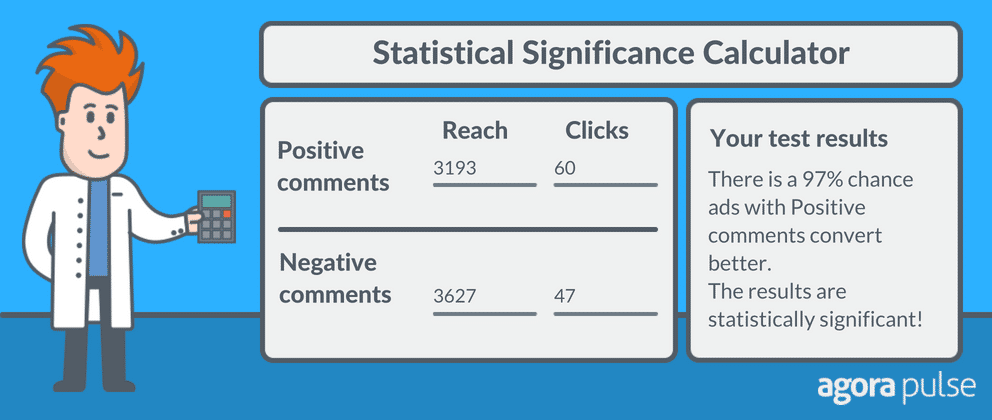

Here are the results based on CTR (all):

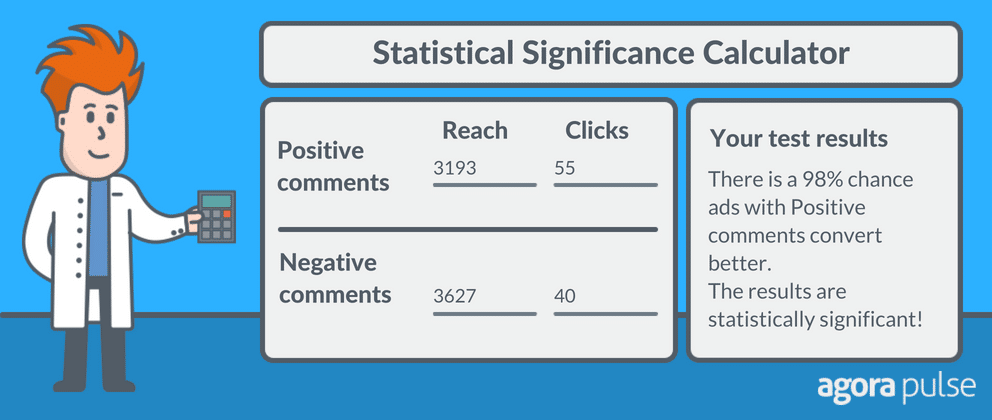

And these are the results based on CTR (link):

Yes, both tests passed this time! If we ran this experiment again, there is a 97% chance for CTR (all) and a 98% chance for CTR (link) that the ad with positive comments would outperform the ad with negative comments.

Conclusion

Here is a quick summary of our takeaways:

- Whether an ad had comments or not didn’t seem to affect clickthroughs much.

- Ads with positive comments were more likely to get clicks than ads with negative comments.

We’d advise encouraging positive comments (duh), but also consider hiding or removing negative comments so that they do not influence others viewing the ads.

Hint: you can use Agorapulse to manage your Facebook ad comments!

Additional Findings from the Test

1. If someone clicked on the ad despite the comments, they’re no more or less likely to convert.

At the end of the experiment, we generated 103 leads (people who signed up for the Facebook Barometer) from 369 link clicks at a conversion rate of 28%.
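As a quick check of the arithmetic, the conversion rate is simply leads divided by link clicks. Both inputs below are the figures reported above.

```python
leads = 103        # sign-ups for the Facebook Barometer
link_clicks = 369  # link clicks across the whole experiment

conversion_rate = leads / link_clicks
print(f"Conversion rate: {conversion_rate:.1%}")  # 27.9%, which rounds to 28%
```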

You would think that people who saw the negative comments were less likely to convert than people who saw the positive comments.

But we found that it wasn’t true!

While people who clicked on the ad with positive comments were 20% more likely to try the tool, the difference was not statistically significant.

This could be due to 2 reasons. First, our dataset may have been too small, with fewer than 100 site visits in each sample. Second, if someone clicked on the ad despite the negative comments, it is likely that they didn’t see those comments or that those comments didn’t bother them at all. That would explain why the conversion rates were similar.
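A figure like "20% more likely" is a relative difference between two conversion rates, not a gap of 20 percentage points. Here is a small sketch of that calculation. The two rates are hypothetical, since we only report the overall numbers above.

```python
def relative_lift(rate_a: float, rate_b: float) -> float:
    """How much higher rate_a is than rate_b, as a fraction of rate_b."""
    if rate_b <= 0:
        raise ValueError("baseline rate must be positive")
    return (rate_a - rate_b) / rate_b


# Hypothetical conversion rates, for illustration only.
positive_rate = 0.30
negative_rate = 0.25

lift = relative_lift(positive_rate, negative_rate)
gap_in_points = (positive_rate - negative_rate) * 100
print(f"Relative lift: {lift:.0%}, absolute gap: {gap_in_points:.0f} points")
```

In this example, a 20% relative lift corresponds to a gap of only 5 percentage points, which is easy for random noise to produce when each sample has fewer than 100 visits.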

2. Time may have been a factor.

When we mapped out the ad performance over time, we found that the ads received fewer clicks as time went by.

The ads would start out strong in the first few days and then fizzle out.

That made me realize that while the CTRs were holding up, the CPMs were rising over time. With a fixed daily budget, a higher CPM means Facebook serves fewer impressions, so we received fewer clicks.

Quick lesson: CPM stands for cost per mille, meaning the cost per 1,000 impressions. It is how much Facebook charges you for showing your ad 1,000 times.
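To see why a rising CPM eats into clicks even when CTR holds steady, here is a short sketch. The $20 daily budget is the one we used per ad; the CPM values and the 2% CTR are hypothetical.

```python
DAILY_BUDGET = 20.0  # dollars per day per ad, as in this experiment


def impressions_for_budget(budget: float, cpm: float) -> int:
    """Impressions a budget buys at a given CPM (cost per 1,000 impressions)."""
    if cpm <= 0:
        raise ValueError("cpm must be positive")
    return int(1000 * budget / cpm)


def expected_clicks(impressions: int, ctr_percent: float) -> float:
    """Clicks you would expect from those impressions at a constant CTR."""
    return impressions * ctr_percent / 100


# Hypothetical CPMs rising over time, with a hypothetical constant 2% CTR.
daily_impressions = [impressions_for_budget(DAILY_BUDGET, c) for c in (10.0, 20.0, 40.0)]
daily_clicks = [expected_clicks(i, 2.0) for i in daily_impressions]

for impressions, clicks in zip(daily_impressions, daily_clicks):
    print(f"{impressions} impressions -> about {clicks:.0f} clicks")
```

Every time the CPM doubles, the same budget buys half as many impressions, so clicks halve too even though the CTR never moved.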

Our takeaway? Make sure we refresh our ads often.

3. Facebook recently gave the app on desktop a facelift and it could have impacted the results of our experiment.

While scrolling through Facebook recently, I realized that ad comments are no longer directly visible on the News Feed.

If you visit Facebook now and try to look at the comments under an ad, you are redirected to the post itself.

In other words, it takes you 2 clicks to see comments under an ad now.

And it also means that some people may not have seen the negative comments on the ads and as a result, the difference between ads with and without comments was less pronounced than we had expected.